In the last post, I talked about a project I am involved in right now to deploy NetApp storage alongside EMC for SAN and NAS. Today, I’m going to talk about my first impressions of the NetApp during deployment and initial configuration.

First Impressions

I’m going to be pretty blunt: I have been working with EMC hardware and software for a while now, and I’m generally happy with the usability of their GUIs. Over that time, I’ve used several major revisions of Navisphere Manager and Celerra Manager, and even more minor revisions, and I’ve never actually found a UI bug. To be clear, EMC, IBM, NetApp, HDS, and every other vendor have bugs in their software, and they all do what they can to find and fix them quickly, but I just haven’t personally seen one in the EMC UIs despite using every feature offered by those systems. (I have, however, come across bugs in the firmware.)

Contrast that with the first day using the new NetApp, running the latest 7.3.1.1L1 code, when we discovered a UI problem within the first 10 minutes. When attempting to add disks to an aggregate in FilerView, we could not select FC disks to add. We could, however, add SATA disks to the FC aggregate. The only way around the issue was to use the CLI via SSH. As I mentioned in my previous post, our NetApp is actually an IBM nSeries, and IBM claims they perform additional QC before their customers get new NetApp code.
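If you end up in the CLI for this, it helps to build the disk list carefully so a SATA spare never slips into an FC aggregate. The sketch below assumes the 7-mode `aggr add <aggr> -d <disk> ...` form; the spare inventory, disk names, type labels, and the `build_aggr_add` helper are all hypothetical and would need to match what your own filer reports.

```python
"""Build a Data ONTAP 7-mode `aggr add` command that only includes one disk type.

Assumption: the spare-disk inventory is hypothetical and hand-entered from
whatever the filer reports; nothing here talks to a real filer.
"""
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SpareDisk:
    name: str       # disk name as reported by the filer, e.g. "0a.16" (hypothetical)
    disk_type: str  # type label, e.g. "FCAL" or "SATA" (hypothetical labels)


def build_aggr_add(aggr: str, spares: List[SpareDisk], wanted_type: str = "FCAL") -> str:
    """Return an `aggr add <aggr> -d <disk> ...` line using only disks of wanted_type."""
    chosen = [d.name for d in spares if d.disk_type == wanted_type]
    if not chosen:
        # Refuse to build a command rather than silently add the wrong disk type.
        raise ValueError(f"no spare disks of type {wanted_type!r}")
    return f"aggr add {aggr} -d " + " ".join(chosen)


if __name__ == "__main__":
    spares = [
        SpareDisk("0a.16", "FCAL"),
        SpareDisk("0a.17", "FCAL"),
        SpareDisk("0c.32", "SATA"),
    ]
    print(build_aggr_add("aggr_fc1", spares))
```

Here the SATA spare is filtered out, so only the two FC disks end up in the command you paste into the SSH session.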

Shortly after that, we found a second UI issue in FilerView. When creating a new Initiator group, FilerView populates the initiator list with the WWNs that have logged in to it. Auto-populating is nice, but FilerView was incorrectly parsing the WWNs of the server HBAs and populating the list with node WWNs rather than port WWNs. We spent several hours trying to figure out why the ESX servers didn’t see any LUNs before we realized that the WWNs in the Initiator group were incorrect. Editing the second digit of each one fixed the problem.
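A quick cross-check of the Initiator group against the port WWNs the hosts actually report would have caught this much sooner. The sketch below is a hypothetical helper, not a NetApp or VMware tool; the WWN values are made up, following the pattern of some HBAs where the node WWN starts with `20:00` and the port WWN with `21:00` (an assumption about the example, not a rule for every vendor).

```python
"""Sanity-check Initiator group WWNs against the host HBAs' real port WWNs.

Assumption: you have copied the host's node and port WWNs by hand; all
values below are hypothetical.
"""
from typing import Dict, Iterable


def normalize_wwn(wwn: str) -> str:
    """Lowercase and strip ':' or '-' separators so WWNs compare reliably."""
    return wwn.replace(":", "").replace("-", "").lower()


def check_igroup(igroup_wwns: Iterable[str],
                 host_port_wwns: Iterable[str],
                 host_node_wwns: Iterable[str] = ()) -> Dict[str, str]:
    """Classify each igroup WWN as 'ok', 'node_wwn' (wrong kind) or 'unknown'."""
    ports = {normalize_wwn(w) for w in host_port_wwns}
    nodes = {normalize_wwn(w) for w in host_node_wwns}
    result = {}
    for wwn in igroup_wwns:
        n = normalize_wwn(wwn)
        if n in ports:
            result[wwn] = "ok"
        elif n in nodes:
            result[wwn] = "node_wwn"  # node WWN entered where a port WWN belongs
        else:
            result[wwn] = "unknown"
    return result


if __name__ == "__main__":
    print(check_igroup(
        igroup_wwns=["20:00:00:e0:8b:01:02:03"],
        host_port_wwns=["21:00:00:e0:8b:01:02:03"],
        host_node_wwns=["20:00:00:e0:8b:01:02:03"],
    ))
```

Anything flagged `node_wwn` or `unknown` is a reason to look at the Initiator group before blaming zoning or the ESX hosts.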

I find it interesting that these issues, which seemed easy to discover, made it through the QC process of two organizations. Data ONTAP 7.3.2RC1 is available now, but I don’t know whether these issues have been addressed.

Manageability

As far as FilerView goes, it is generally easy to use once you know how NetApp systems are provisioned. The biggest drawback in an HA-Filer setup is that you have to open FilerView separately for each Filer and configure each one as a separate storage system. Two HA-Filer pairs? Four FilerView windows. If you include the initial launch page that comes up before you get to the actual FilerView window, you double the number of browser windows open to manage your systems. NetApp likes to mention that they have unified management for NAS and SAN, whereas EMC has two separate platforms, each with its own management tools. But EMC treats the two storage processors (SPs) in a Clariion in a much more unified manner, and provisioning is done against the entire Clariion, not per SP. Further, Navisphere can manage many Clariions in the same UI, and Celerra Manager works similarly for EMC NAS. Six of one, half a dozen of the other, some say, except that I generally provision NAS storage and SAN storage at different times, and I’d rather have all of the controllers/filers in the same window than NAS and SAN in the same window. Just my preference.

I should mention that NetApp recently released System Manager 1.0 as a free download. This new admin tool does present all of the controllers in one view and may end up being a much better tool than FilerView. For now, though, it’s missing too many features to be used 100% of the time, and it’s Windows-only since it’s based on the Microsoft Management Console (MMC). Which brings me to my other problem with managing the NetApp: neither FilerView nor System Manager can actually do everything you might need to do, and that means you end up in the CLI, FREQUENTLY. I’m comfortable with CLIs, and they are extremely powerful for troubleshooting problems and especially for scripting batch changes, but I don’t like being forced into the CLI for general administration. GUI-based management helps prevent potentially crippling typos and can make it easier to visualize your environment. During deployment, we kept going back and forth between FilerView and the CLI to configure different things. Further, since we were using MultiStore (vFilers) for CIFS shares and disaster recovery, we were stuck in the CLI almost entirely, because System Manager can’t even see vFilers, and FilerView can only create them and attach volumes.

Had I not been managing Celerra and Clariion for so long, I probably wouldn’t have noticed the above problems. After several years of configuring CIFS, NFS, iSCSI, Virtual Data Movers, IP interfaces, snapshots, replication, DR failover, etc. on Celerra, as well as literally thousands of LUNs for hundreds of servers on Clariion, I don’t recall EVER being forced to use the CLI. CelerraCLI and NaviCLI are very powerful, I have written many scripts leveraging them, and I’ll use the CLI when troubleshooting an issue. But every single feature I’ve ever used on the Celerra or Clariion, I was able to configure completely from start to finish using the GUI. Installing a Celerra from scratch even uses a GUI-based installation wizard. Comparing Clariion Storage Groups with NetApp Initiator groups and LUN maps isn’t even fair. For MS Exchange, I mapped about 50 LUNs to the ESX cluster, which took about 30 minutes in FilerView. On the Clariion, the same operation is done by just editing the Storage Group and checking each LUN, taking only a couple of minutes for the entire process.
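Since the CLI is where batch changes are easiest on the NetApp, one way to avoid clicking through 50 LUN maps is to generate the commands and paste them into an SSH session. This is a minimal sketch assuming the 7-mode `lun map <lun_path> <initiator_group> [lun_id]` form; the volume path, LUN names, igroup name, and the `lun_map_commands` helper are hypothetical.

```python
"""Generate Data ONTAP 7-mode `lun map` commands for a batch of LUNs.

Assumption: paths and the igroup name are hypothetical; review the output
before pasting it into a filer session.
"""
from typing import List, Sequence


def lun_map_commands(lun_paths: Sequence[str], igroup: str, first_id: int = 0) -> List[str]:
    """Return one `lun map <path> <igroup> <lun_id>` line per LUN, with sequential IDs."""
    return [
        f"lun map {path} {igroup} {first_id + offset}"
        for offset, path in enumerate(lun_paths)
    ]


if __name__ == "__main__":
    # 50 hypothetical Exchange LUNs in one hypothetical volume.
    paths = [f"/vol/exch_vol/lun{i:02d}" for i in range(1, 51)]
    for cmd in lun_map_commands(paths, "esx_cluster"):
        print(cmd)
```

Assigning the LUN IDs explicitly, rather than letting the filer pick them, keeps the IDs consistent across every host in the ESX cluster's Initiator group.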

Now, all of the above commentary has to do with the management tools, UIs, and to some degree personal preferences, and has no bearing on the equipment or its underlying functionality. There are, of course, optional management tools like Operations Manager, Provisioning Manager, and Protection Manager available from NetApp, just as there are Control Center from EMC (which, incidentally, can monitor the NetApp) and Command Central from Symantec. Depending on your overall needs, you may want to look at the optional management tools, or FilerView may be perfectly fine.

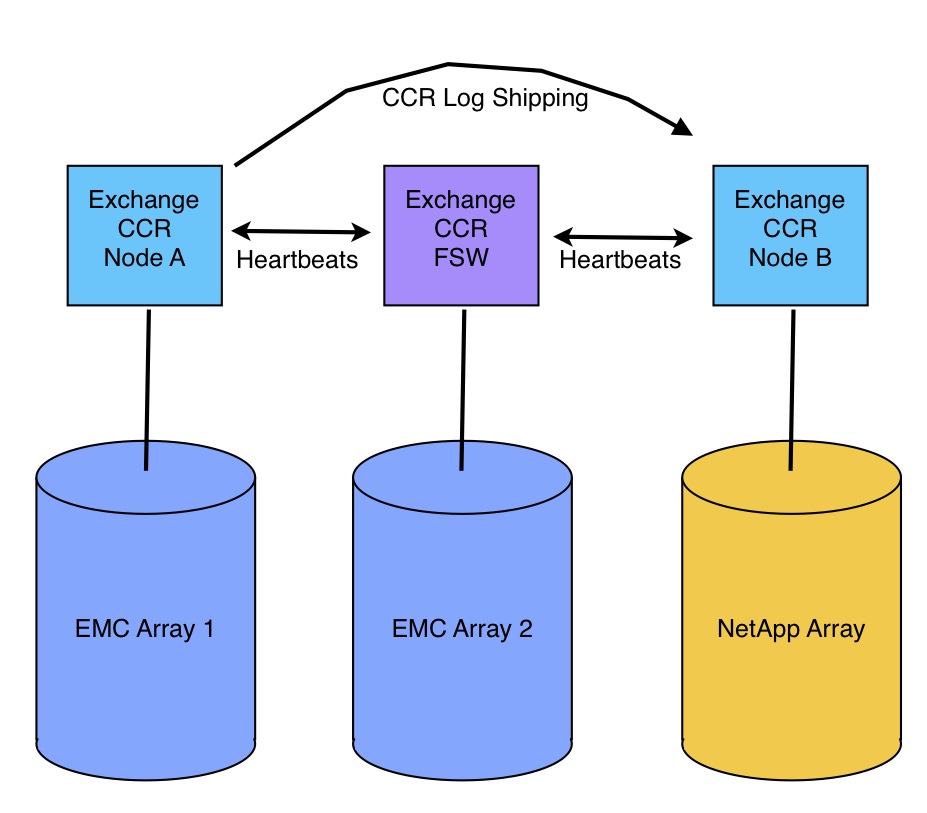

In the next post, I’ll get into more specifics about how the Exchange 2007 CCR cluster turned out in this new environment, along with some notes on making CCR truly redundant. I’ve also been working on the NAS side of the project, so I’ll also post about that some time soon.
