I started this post before I started working for EMC and got sidetracked with other topics. Recent discussions I’ve had with people have got me thinking more about orchestration of data protection, replication, and disaster recovery, so it was time to finish this one up…

———————————–

Before I came to work for EMC, I was working on a project that used NetApp and EMC storage simultaneously for redundancy. I had a chance to put various tools from both vendors into production and was able to observe some of the differences firsthand. This is a follow-up to my previous NetApp and EMC posts…

NetApp and EMC: Real world comparisons

NetApp and EMC: Startup and First Impressions

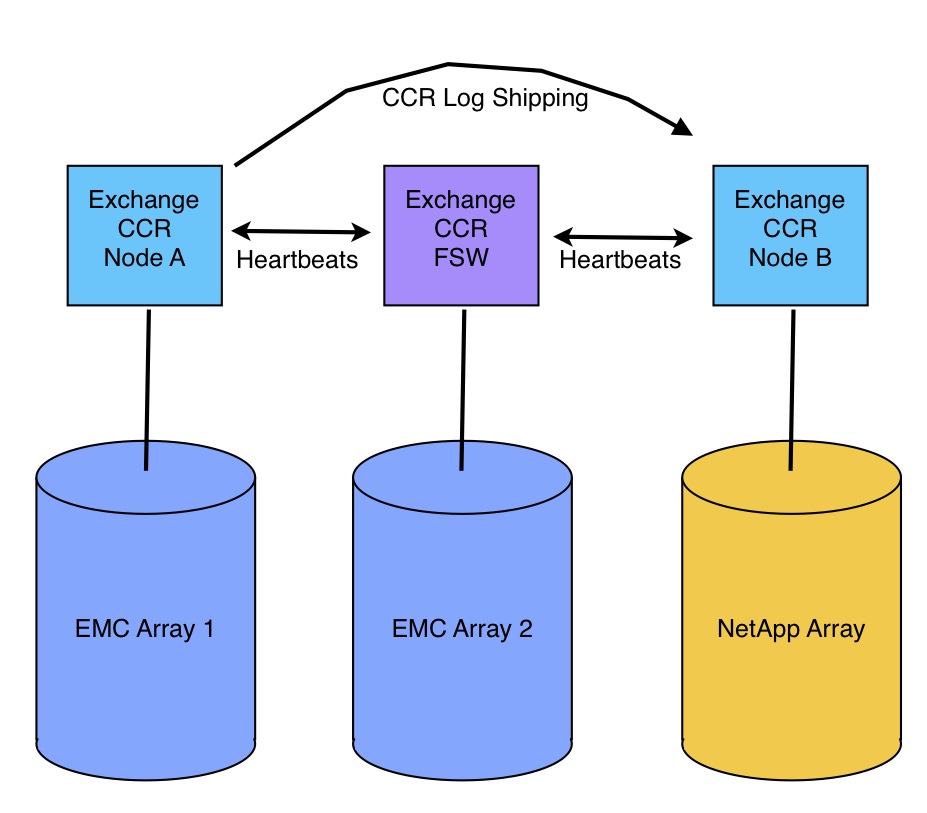

NetApp and EMC: ESX and Exchange 2007 CCR

NetApp and EMC: Exchange 2007 Replication

Specifically, this post is a comparison between NetApp SnapManager 5.x and EMC Replication Manager 5.x. First, here’s a quick background on both tools based on my personal experience using them.

Description

EMC Replication Manager (RM) is a single application that runs on a dedicated “Replication Manager Server.” RM agents are deployed to the hosts of applications that will be replicated. RM supports local and remote replication features in EMC’s CLARiiON storage array, Celerra Unified NAS, Symmetrix DMX/V-Max, Invista, and RecoverPoint products. With a single interface, Replication Manager lets you schedule, modify, and monitor snapshot, clone, and replication jobs for Exchange, SQL, Oracle, SharePoint, VMware, Hyper-V, etc. RM supports role-based authentication, so application owners can be given access to the jobs for their own applications to monitor and manage replication. RM can manage jobs across all of the supported applications, array types, and replication technologies simultaneously. RM is licensed by storage array type and host count. No specific license is required to support the various applications.

NetApp SnapManager is actually a family of products, one for each application that NetApp supports. There are versions of SnapManager for Exchange, SQL, SharePoint, SAP, Oracle, VMware, and Hyper-V. The SnapManager application is installed on each host of an application that will be replicated, and jobs are scheduled on each specific host using Windows Task Scheduler. Each version of SnapManager is licensed by application and host count. I believe you can also license SnapManager per array instead of per host, which could make financial sense if you have lots of hosts.

Commonality

EMC Replication Manager and NetApp SnapManager tackle the same customer problem: providing guaranteed recoverability of an application, in the primary or a secondary datacenter, using array-based replication technologies. Both products leverage array-based snapshot and replication technology and layer application-consistency intelligence on top of it. In general, they automate local and remote protection of data. Both also have extensive CLI support for those who want it.

Differences

- Deployment

  - EMC RM: Replication Manager is a client-server application installed on a control server. Agents are deployed to the protected servers.

  - NetApp SM: SnapManager is several applications that are installed directly on the servers that host the applications being protected.

- Job Management

  - EMC RM: All job creation, management, and monitoring is done from the central GUI. Replication Manager has a Java-based GUI.

  - NetApp SM: Job creation and monitoring is done via the SnapManager GUI on the server being protected. SnapManager uses an MMC-based GUI.

- Job Scheduling

  - EMC RM: Replication Manager has a central scheduler built into the product that runs on the RM Server. Jobs are initiated and controlled by the RM Server; the agent on the protected server performs the necessary tasks as required.

  - NetApp SM: SnapManager jobs are scheduled with Windows Task Scheduler after creation. The SnapManager GUI creates the initial scheduled task when a job is created through the wizard. Modifications are made by editing the scheduled task in Windows Task Scheduler.

So while the tools essentially perform the same function, you can see that there are clear architectural differences, and that’s where the rubber meets the road. Being a centrally managed client-server application, EMC Replication Manager has advantages for many customers.

Simple Comparison Example: Exchange 2007 CCR cluster

(snapshot and replicate one of the two copies of Exchange data)

With NetApp SnapManager, the application is installed on both cluster nodes. You must then log on to the console of the node that hosts the copy you want to replicate and create two jobs that run on the same schedule: Job A is configured to run when the node is active, and Job B is configured to run when the node is passive. Because some of the settings differ between the two cases, I was unable to configure a single job that ran successfully regardless of whether the node was active or passive. If you want to modify the settings, you either have to edit the command-line options in the scheduled task or create a new job from scratch and delete the old one.

With EMC Replication Manager, you deploy the agent to both cluster nodes, then in the RM GUI, create a job against the cluster virtual name, not the individual node. You define which server in the cluster you want the job to run on, and whether the job should run when that node is passive, active, or both. All logging, monitoring, and scheduling are handled in the same RM GUI, even if you have 50 Exchange clusters, or SQL and Oracle for that matter. Modifying the job is done by right-clicking on the job and editing the properties. Modifying the schedule is done in the same way.
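To make the difference concrete, here is a minimal Python sketch of the idea behind a single cluster-aware job. This is not Replication Manager's actual data model or API; `ClusterJob`, `RunWhen`, `should_run`, and the cluster and node names are all hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class RunWhen(Enum):
    ACTIVE = "active"
    PASSIVE = "passive"
    BOTH = "both"


@dataclass(frozen=True)
class ClusterJob:
    cluster_name: str    # cluster virtual name the job is created against
    preferred_node: str  # node whose copy of the data gets replicated
    run_when: RunWhen    # role(s) the node may be in when the job runs


def should_run(job: ClusterJob, node: str, node_is_active: bool) -> bool:
    """Return True if the job should run on `node` given its current role."""
    if node != job.preferred_node:
        return False
    if job.run_when is RunWhen.BOTH:
        return True
    if job.run_when is RunWhen.ACTIVE:
        return node_is_active
    return not node_is_active


# One job definition covers both roles, so no Job A / Job B pair is needed.
job = ClusterJob("EXCH-CCR01", "node-b", RunWhen.BOTH)
print(should_run(job, "node-b", node_is_active=True))
print(should_run(job, "node-b", node_is_active=False))
print(should_run(job, "node-a", node_is_active=True))
```

The point of the sketch is that the active/passive decision lives in one job definition held centrally, rather than in two separately scheduled tasks on the node.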

So as the number of servers and clusters increases in your environment, having a central UI to manage and monitor all jobs across the enterprise really helps. But here’s where having a centrally managed application really shines…

But what if it gets complicated?

Let’s say you have a multi-tier application like IBM FileNet, EMC Documentum, or OpenText and you need to replicate multiple servers, multiple databases, and multiple file systems that are all related to that single application. Not only does EMC Replication Manager support SQL and file systems in the same GUI, you can also tie the jobs together and make them dependent on each other for both failure reporting and scheduling. For example, you can snapshot a database and a file system, then replicate both of them without worrying about how long the first job takes to complete. Jobs can start other jobs on completely independent systems as necessary.
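Here is a rough Python sketch of what job dependence buys you. It is an illustration of the general technique only, not how Replication Manager is implemented; the `Job` class, `run_jobs` function, and job names are hypothetical, and the sketch assumes the dependencies contain no cycles.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Job:
    name: str
    action: Callable[[], bool]  # returns True on success
    depends_on: List[str] = field(default_factory=list)


def run_jobs(jobs: List[Job]) -> Dict[str, str]:
    """Run each job only after all of its dependencies have succeeded."""
    by_name = {j.name: j for j in jobs}
    status: Dict[str, str] = {}

    def run(name: str) -> None:
        if name in status:
            return
        job = by_name[name]
        for dep in job.depends_on:
            run(dep)
        # A failed or skipped dependency stops the dependent job.
        if any(status[d] != "ok" for d in job.depends_on):
            status[name] = "skipped"
            return
        status[name] = "ok" if job.action() else "failed"

    for j in jobs:
        run(j.name)
    return status


jobs = [
    Job("snap_database", lambda: True),
    Job("snap_filesystem", lambda: True),
    Job("replicate_both", lambda: True,
        depends_on=["snap_database", "snap_filesystem"]),
]
print(run_jobs(jobs))
```

The replication step starts as soon as both snapshots report success, however long they take, and it is skipped (and reported as such) if either snapshot fails.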

Without this job dependence functionality, you’d generally have to create scheduled tasks on each server and have dependent jobs start after a delay that is long enough to allow the first job to complete, yet as short as possible to prevent the two parts of the application from getting too far out of sync. Sometimes the first job takes longer than usual, causing subsequent jobs to complete incorrectly. This is where Replication Manager shows its muscle, with its ability to orchestrate complex data protection strategies, across the entire enterprise, with your choice of protection technologies (CDP, Snapshot, Clone, Bulk Copy, Async, Sync) from a single central user interface.
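The fixed-delay trade-off is easy to see with a little arithmetic. The Python sketch below uses made-up run times (not measurements from either product) and a hypothetical helper, `fixed_delay_outcomes`, to show what happens when the dependent task simply starts a fixed number of minutes after the first one.

```python
def fixed_delay_outcomes(first_job_minutes, delay_minutes):
    """For each run, report whether the dependent job started before the
    first job finished, and how long it waited idle after the first job
    had already completed."""
    outcomes = []
    for duration in first_job_minutes:
        started_too_early = duration > delay_minutes
        idle_gap = max(0, delay_minutes - duration)
        outcomes.append((duration, started_too_early, idle_gap))
    return outcomes


# Hypothetical durations of the first job, in minutes, over five nights.
durations = [12, 14, 11, 31, 13]
for row in fixed_delay_outcomes(durations, delay_minutes=20):
    print(row)
```

With a 20-minute delay, the two copies drift apart by several idle minutes on a normal night, and on the night the first job runs long, the dependent job starts before its input is ready. Raising the delay fixes the second problem only by making the first one worse.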