Exchange Replication

Building on the redundant storage project, we also wanted to replicate Exchange to a remote datacenter for disaster recovery. Until now we’ve used EMC CLARiiON MirrorView/A and Replication Manager for various applications. For Exchange we decided to use NetApp SnapMirror, both to leverage the additional hardware and to evaluate NetApp’s replication functionality against EMC’s.

On EMC CLARiiON storage, there are a couple of choices for replicating applications like Exchange:

1.) Use MirrorView/Async with Consistency Groups to replicate Exchange databases in a crash-consistent state.

2.) Use EMC Replication Manager with SnapView snapshots and SAN Copy/Incremental to update the remote site copy.

Similar to EMC’s Replication Manager, NetApp offers SnapManager for various applications, which coordinates snapshots and replica updates on a NetApp filer.

Whether using EMC Replication Manager (RM) or NetApp SnapManager (SM), software must be installed on all nodes in the Exchange cluster to quiesce the databases and initiate updates. The advantage of consistency groups with MirrorView is that no software needs to be installed on the host; all work is performed within the storage array. The advantage of RM and SnapManager for Exchange (SM/E) is that database consistency is verified on each update and the software can coordinate restoring data to the same or alternate servers, which must be done manually with MirrorView.

NetApp doesn’t support consistent snapshots across multiple volumes, so the only option on a filer is to use SnapManager for Exchange to coordinate snapshots and SnapMirror updates.

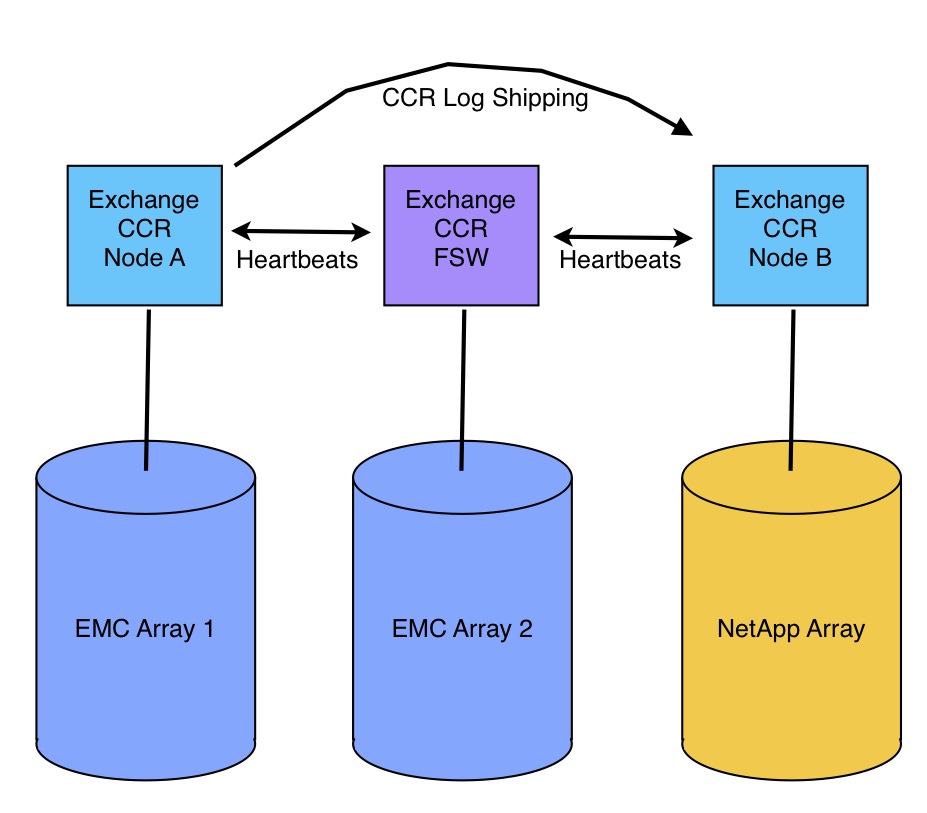

Our first attempt at configuring SnapManager for Exchange failed when we ran into a compatibility issue with SnapDrive. SnapManager depends on SnapDrive to map LUNs between the host and filer and to communicate with the filer to create snapshots, etc. We’d discussed our environment with NetApp and IBM ahead of time, specifically that we run Exchange CCR on VMware with Fibre Channel LUNs, and everyone agreed that SnapDrive supports VMware, Exchange, Microsoft Clustering, and VMware Raw Devices. It turns out that SnapDrive 6 DOES support all of this, but not all at the same time. Specifically, MSCS clustering is not supported with FC Raw Devices on VMware. In comparison, EMC’s Replication Manager has supported this configuration for quite a while. After further discussion, NetApp confirmed that our environment was not supported in the current version of SnapDrive (6.0.2) and that SnapDrive 6.2, which was still in beta, would resolve the issue.

Fast forward a couple of months: SnapDrive 6.2 has been released and it does indeed support our environment, so we’ve finally installed and configured SnapDrive and SnapManager. We’ve dedicated the EMC side of the Exchange environment to the active nodes and the IBM side to the passive nodes. SnapManager snapshots the passive node databases, mounts them to run database verification, then updates the remote mirror using SnapMirror.
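The order of operations in that update cycle can be sketched roughly as follows. This is only an illustration in Python, not how SnapManager is implemented: the four callables are hypothetical stand-ins for the snapshot, mount, verification, and SnapMirror steps, and skipping the mirror update when verification fails is my assumption.

```python
from typing import Any, Callable, List


def run_update_cycle(
    snapshot: Callable[[], Any],            # hypothetical: snapshot the passive-node databases
    mount: Callable[[Any], Any],            # hypothetical: mount the snapshot for checking
    verify: Callable[[Any], bool],          # hypothetical: database verification
    update_mirror: Callable[[Any], None],   # hypothetical: trigger the remote mirror update
) -> List[str]:
    """Run one cycle and return the names of the steps that were reached."""
    steps: List[str] = []

    snap = snapshot()
    steps.append("snapshot")

    mounted = mount(snap)
    steps.append("mount")

    if not verify(mounted):
        # Assumption: an unverified snapshot is not sent to the remote site.
        steps.append("verify-failed")
        return steps
    steps.append("verify")

    update_mirror(snap)
    steps.append("mirror-update")
    return steps
```

The point of the ordering is that verification sits between the snapshot and the mirror update, so its duration adds directly to the length of every cycle.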

While SnapManager does exactly what we need it to do, my experience with it hasn’t been great so far. First, SnapManager relies on Windows Task Scheduler to run scheduled jobs, which has been causing issues. The job runs on its schedule for a day and then stops, after which the task must be edited to make it run again. This happens in the lab and on both of our production Exchange clusters. I also found a blog post about this same issue from someone else.

The other issue right now is that database verification takes a long time, due to the slow speed of ESEUTIL itself. A single update on one node takes about 4 hours (for about 1 TB of Exchange data), so we haven’t been able to achieve our goal of a 2-hour replication RPO. IBM will be onsite next week to review our status and discuss options. An update will follow once we find a solution to both issues. In the meantime, I will post a comparison of replication tools between EMC and NetApp soon.
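To put rough numbers on that gap, here is a back-of-the-envelope calculation in Python. It uses only the figures above (about 1 TB, about 4 hours, a 2-hour target) and assumes, for simplicity, that the whole cycle time is spent processing the data at a steady rate and that 1 TB is 1024 × 1024 MB. The function names are mine, for illustration only.

```python
def average_rate_mb_s(data_tb: float, hours: float) -> float:
    """Average rate implied by processing data_tb terabytes in the given hours."""
    megabytes = data_tb * 1024 * 1024  # assumption: 1 TB = 1024 * 1024 MB
    return megabytes / (hours * 3600)


def cycle_fits_rpo(cycle_hours: float, rpo_hours: float) -> bool:
    """A cycle longer than the RPO cannot keep the remote copy within it."""
    return cycle_hours <= rpo_hours


observed = average_rate_mb_s(1.0, 4.0)  # what a 4-hour update implies
required = average_rate_mb_s(1.0, 2.0)  # what a 2-hour RPO would need

print(f"observed: {observed:.1f} MB/s, required: {required:.1f} MB/s")
print("fits 2-hour RPO:", cycle_fits_rpo(4.0, 2.0))
```

Under these assumptions the current cycle averages roughly 73 MB/s, and a 2-hour RPO would need about twice that, or half as much data verified per update.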