Sizing your Building Block <- Part 5 -> I’m too small for Building Blocks

You may have noticed in the last installment that I did not include any FibreChannel switches in the example BOM. There are essentially three ways to deal with the SAN connectivity in a Building Block and there are advantages as well as disadvantages to each. (Note: this applies to iSCSI as well)

1.) Use switches that already exist in your datacenter: You can attach each storage array and each server back to a common fabric that you already have (or that you build as part of the project) and zone each of the Building Block’s servers to their respective storage array.

- Advantages:

- Leverage any existing fabric equipment to reduce costs and centralize management

- Allow for additional servers to be added to each Building Block in the future

- Allow for presenting storage from one Building Block to servers in a different Building Block (useful for migrations)

- Disadvantages:

- Increases complexity – Requires you to configure zoning within each Building Block during deployment

- Increases chances for human error that could cause an outage – Accidentally deleting entire Zonesets or VSANs is not as uncommon as you might think

- Reduces the availability isolation between Building Blocks – The fabric itself becomes a point-of-failure common to all Building Blocks.

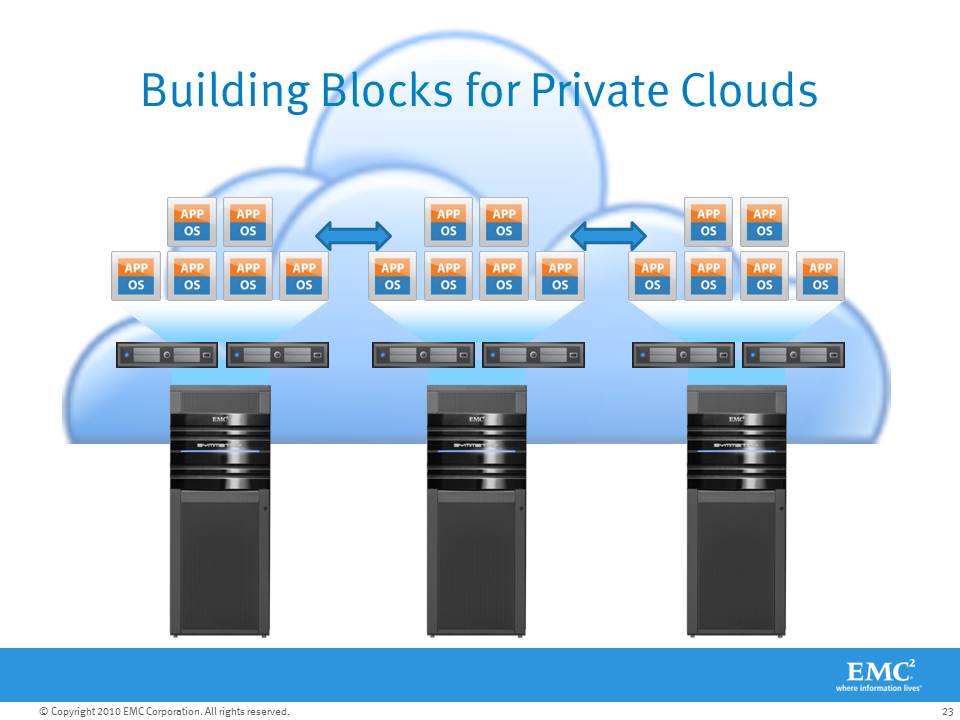

2.) Deploy a dedicated fabric within each Building Block: Since each Building Block has a known quantity of storage and server ports, you can easily add a dual-switch/fabric into the design. In our example of 9 hosts you’d need a total of 18 ports for hosts and maybe 8 ports for the storage array for a combined total of 26 switch ports. Two 16-port switches can easily accommodate that requirement.

- Advantages:

- Depending on the switches used, it could allow for additional servers in each Building Block in the future

- Allow for presenting storage from one Building Block to servers in a different building block (useful for migrations) by connecting ISLs between Building Blocks

- Maintains the Building Block isolation by not sharing the fabric switches across Building Blocks.

- Disadvantages:

- Increases complexity – Requires you to configure zoning within each Building Block during deployment

- Increases chances for human error that could cause an outage – Again, accidentally deleting entire Zonesets or VSANs is not as uncommon as you might think

3.) Dispense with the fabric entirely: Since Building Blocks are relatively small, resulting in fewer total initiator/target pairs, it’s possible in some cases to directly attach all of the hosts to the storage array. In our example, the nine hosts need eighteen ports and the VNX5700 supports up to twenty four FC ports. This means you can directly attach all of the hosts to the array and still have six remaining ports on the array for replication, etc. Different arrays from EMC as well as other vendors will have various limits on the number of FC ports supported. Also, not all vendors support direct attached hosts so you’ll need to check that with your storage vendor of choice to be sure.

- Advantages:

- Maintains the Building Block isolation by not sharing the fabric switches across Building Blocks.

- Simplifies deployment by eliminating the need to do any zoning at all and effectively eliminates any port queue limits (HBA elevator depth settings)

- Simplifies troubleshooting by eliminating the fabric (buffer to buffer credits, bandwidth, port errors, etc) from the IO path.

- Disadvantages:

- Limits the number of hosts per Building Block by the maximum number of ports supported by the storage array.

- More difficult to non-disruptively migrate VMs between Building Blocks since storage cannot be shared across. (If all Building Blocks are in the same Virtual Data Center in VMWare vSphere, you can still live-migrate VMs via the IP network between Building Blocks using Storage vMotion)

If you decide that the host count limit is okay, and either non-disruptive migration between Building Blocks is unnecessary or Storage vMotion will work for you, then eliminating the fabric can reduce cost and complexity, while improving overall availability and time to deploy. If you need the flexibility of a fabric, I personally like using dedicated switches in each building block. Cisco and Brocade both offer 1U switches with up to 48 ports per switch that will work quite well. Always deploy two switches (as two fabrics) in each Building Block for redundancy.

Okay, so you’ve managed to calculate the size of your environment, how much time it will take you to virtualize it, the number of Building Blocks you need, and the specifications for each Building Block, including whether you need a fabric. Now you can submit your budget, get your final quotes, and place orders. Once the equipment arrives it’s time to implement the solution.

When your first Building Block arrives, it would be a valuable use of time to learn how to script the configuration for each component in the Building Block. An EMC VNX array can be completely configured using Naviseccli or PowerShell, from the Storage Pool and LUN provisioning to initiator registration and Host/LUN masking. VMWare vSphere can similarly be configured using scripts or PowerShell. If you take the time to develop and test your scripts against your first Building Block, then you can use those scripts to quickly stand up each additional Building Block you deploy. Since future Building Blocks will be nearly identical, if not entirely identical, the scripts can speed your deployment time immensely.

EMC Navisphere/Unisphere CLI (for VNX) is documented fully in the VNX Command Line Interface (CLI) Reference for Block 1.0 A02. This document is available on EMC PowerLink at the following location:

Home > Support > Technical Documentation and Advisories > Software ~ J-O ~ Documentation > Navisphere Management Suite > Maintenance/AdministrationBe sure to leverage any storage vendor plug-ins available to you for your chosen hypervisor (VMWare, Hyper-V, etc) to improve visibility up and down the layers and reduce the number of management tools you need to use on a daily basis.

For example, EMC Unisphere Manager, the array management UI running on the VNX storage array, includes built-in integration with VMWare and other host operating systems. Unisphere Manager displays the VMFS datastores, RDMs, and VMs that are running on each LUN and a storage administrator can quickly search for VM names to help with management and/or troubleshooting tasks.

EMC also provides free downloadable plug-ins for VMWare vSphere and Hyper-V so server administrators can see what storage arrays and LUNs are behind their VMs and datastores. The plug-ins also allow administrators to provision new LUNs from the storage array through the plug-ins without needing access to the array management tools.

Depending on which storage vendor you choose, if you build a fabric-less Building Block, you may be able to do all of your server and storage administration from vCenter if you leverage the free plug-ins.

Sizing your Building Block <- Part 5 -> I’m too small for Building Blocks

Like this:

Like Loading...