So you picked up a Nest at the store, or online, because you realized (or thought maybe) that you could save some money on your heating or cooling bills, and/or because remotely controlling your home HVAC from your phone seemed pretty slick.

Now I won’t really spend much time on the energy/cost savings (or possibly lack thereof) related to using a Nest vs. any other programmable thermostat, but suffice it to say I’m dubious as to whether Nest will actually save me any money in its lifetime. But that’s not why I got it. Being able to remotely set the furnace to away and bring it back to life as needed from my mobile phone is interesting enough to me. Combine that with energy consumption statistics and I can see at least enough benefit to warrant trying it out.

Further, I am supporting a startup project called Ecovent which integrates with Nest and will allow individual control of the temperature in each room of our house. No more cold office with an overheated living room…

Anyway, I picked up the Nest while upgrading my wife’s mobile phone because I was able to bundle my items together for a little discount. At home I spent just under 10 minutes installing it; it really IS easy! It looked great and seemed to work just fine. It was evening, so I set the temp to 60˚F and left it alone for the night.

The next morning I set the furnace to 69˚F from my phone before I got out of bed and started getting ready for the day. From 7am until about 10am the furnace turned on and off, on and off, repeatedly, but the house never got any warmer. The Nest itself seemed fine, with no errors on screen. I turned the knob up a bit and it said it was heating, but I still got the same result. I gave up on it for most of the day, thinking maybe it was learning how quickly or slowly the furnace raises the temperature. WRONG!

Later in the evening it was still not working, so I Google’d around a bit (yes, I use Google, so I can use the big G) for this issue and found a few notes in discussion forums. I didn’t find anything useful on Nest’s own website regarding this issue (although I searched again today and found this KB article), and calling customer support is always my last resort, because I’ve found that most of the time customer support organizations don’t know their own product much better than what I can figure out on my own with the Internet at my disposal.

What I did find on the discussion forums indicated that the problem was that there wasn’t enough power available in the control circuit and/or board to fire up the gas burners, and that the furnace is designed to shut down the fan and heat cycle after two minutes if the burners haven’t ignited. I also saw several Nest owners comment that they had to call out HVAC repair technicians to figure out what the problem was, presumably at a fairly hefty cost. The good news is that I was able to determine the cause and fix the problem myself, and I’ll describe that here. It’s quite simple. The caveat is that your house may not have the thermostat wiring in the walls that you need in order to fix it, which means running a new wire, and that is decidedly more involved than if you already have the wiring in place.

First, the ultimate issue is that the Nest consumes more power than a typical thermostat. It has a color screen with backlighting, an actual CPU running an operating system, and a WiFi radio. It also has an embedded rechargeable battery to keep it running when you remove it from its wall base, or when the power is out. The power to run the Nest AND charge the battery comes from the 24VAC control board in the furnace. The Nest draws more current (amperage) than a normal thermostat, and that current comes from the same power source as the current required to close the relays that turn on the fan, heat the igniter, and open the gas valves. This is where we get into problems.

The sequence goes like this…

- The Nest is using power all the time

- If the battery is still charging for some reason it’s using even more power

- At some point Nest decides it’s too cold and sends the Heat signal to the furnace; sending this signal takes some more power

- After the furnace fan has been running for a few seconds it’s time to ignite the burner. This takes a bit more power (close relay to heat up igniter, close relay to open gas valves)

- But now the circuits going through the Nest don’t have enough current left to do this, and the voltage has dropped as a result, so the relays don’t actually close… and the burners never get gas and/or the igniter doesn’t heat up.

- Two minutes pass and the furnace senses that the burners still aren’t lit and shuts down.

- Lather, Rinse, Repeat

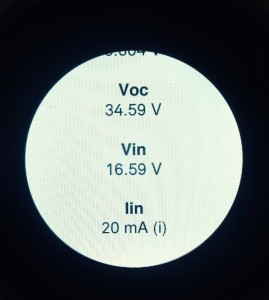

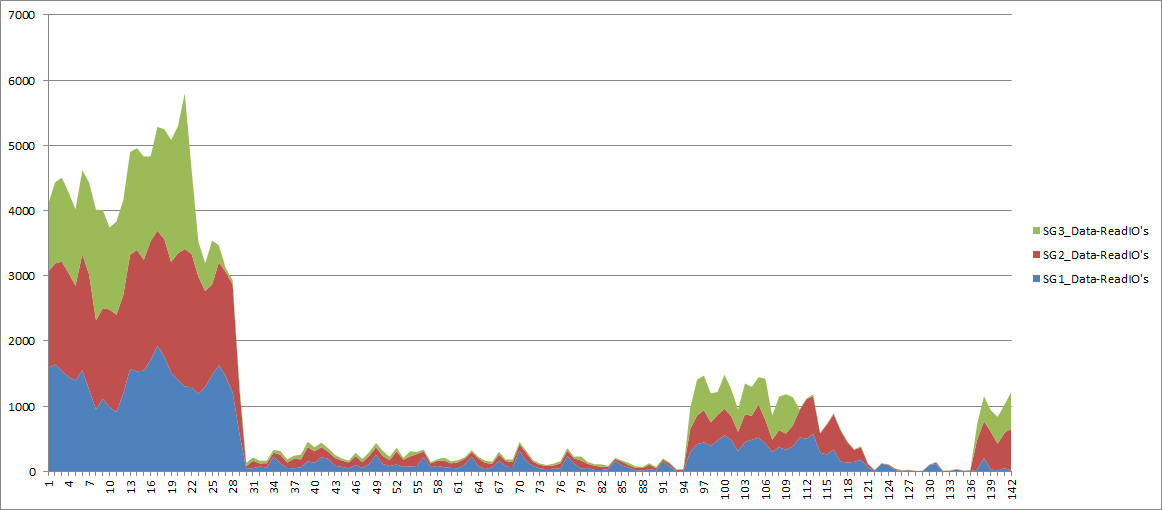

You can determine very quickly with Nest if this is going on by looking at the technical data screen.

Notice the Voc and Vin are wildly different. This means that the AC sine-wave is fluctuating, i.e. the voltage is dropping. And the Lin is a current measurement showing 20mA. According to online discussions this should be around 100mA.

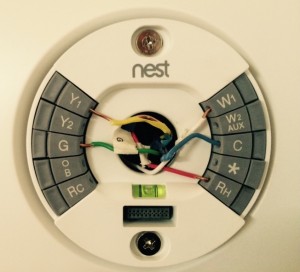
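If you want to sanity-check your own readings, here is a minimal sketch of the comparison I'm doing by eye. To be clear, this is my own illustration and not anything from Nest: the `PowerReading` type, the `looks_underpowered` function, and both thresholds (a 20% gap between Voc and Vin, and 60mA as a rough midpoint between the bad 20mA and the expected 100mA) are assumptions I picked for the example.

```python
from dataclasses import dataclass


@dataclass
class PowerReading:
    """Values copied by hand from the Nest technical data screen."""
    voc: float     # Voc, in volts
    vin: float     # Vin, in volts
    lin_ma: float  # Lin, in milliamps


def looks_underpowered(reading: PowerReading,
                       max_gap_ratio: float = 0.2,     # assumed threshold
                       min_current_ma: float = 60.0    # assumed threshold
                       ) -> bool:
    """Return True if the readings resemble the failing case described above."""
    # "Wildly different" Voc and Vin: the gap is a large fraction of the larger value.
    gap = abs(reading.voc - reading.vin)
    sagging = gap > max_gap_ratio * max(reading.voc, reading.vin)
    # Current well below the ~100mA that the forum discussions say is normal.
    starved = reading.lin_ma < min_current_ma
    return sagging or starved
```

For example, a hypothetical reading of `PowerReading(voc=30.0, vin=10.0, lin_ma=20.0)` would be flagged, while `PowerReading(voc=38.0, vin=37.5, lin_ma=100.0)` would not. Those voltages are made up for illustration; use whatever your own screen shows.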

The fix for this is to add power. The most common method of doing that is to connect the blue “C” common wire between the furnace and the Nest. This makes it so that the Nest doesn’t steal/rob its power from the same lines that are used to control the heat and fan.

You will notice I already had an extra blue wire in the wall, but it wasn’t in use, so I connected it at both ends.

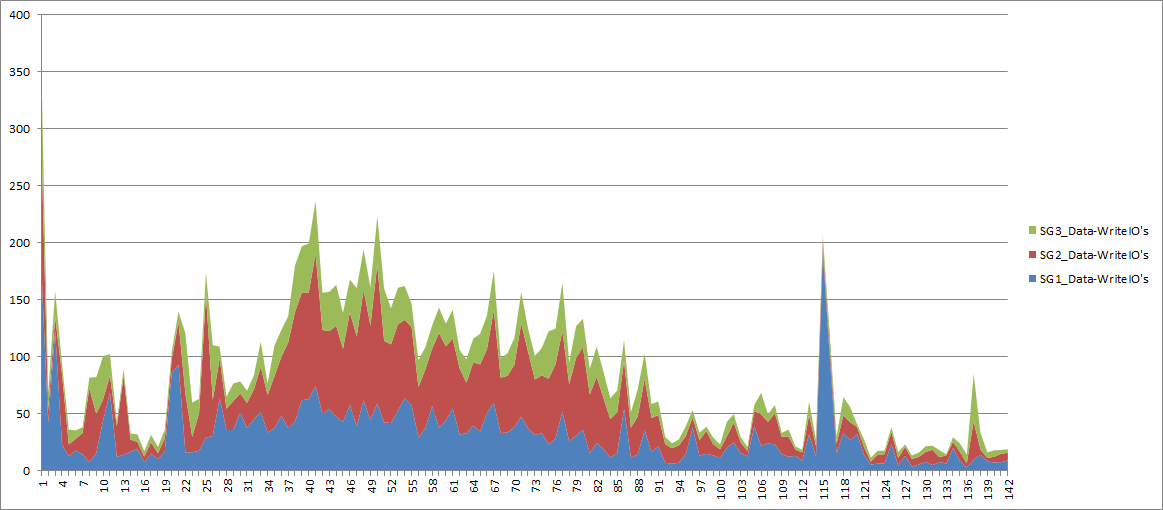

Now look at the Voc, Vin, and Lin values.

The Voc and Vin are very close, so the AC sine-wave is stable, and Lin is 100mA. This is how it should be, and now the furnace works perfectly.

So hopefully if you run into this, now you know how to resolve it. Unfortunately, if you don’t have a spare wire you are going to have to run a new wire through the wall, which will be somewhat, or very, difficult depending on your home.

My Nest is very new, so it is a Gen 2 device. Some of the discussions indicated that the Gen 1 devices did not originally have this problem, and that it started occurring after a software upgrade sometime in the recent past. The fix was the same.

After this experience I sent some feedback to Nest.

- It seems common enough of a problem that it should be mentioned in the install guide. Common issues and their solutions should be readily available to self-install homeowners.

- It also seems like the Nest software could very easily detect this issue. It already monitors the Voc, Vin, and Lin values obviously, and it knows how often the furnace is cycling. It would take very little code to detect the combination of factors and display an alert on the screen and an iPhone notification that there is a power issue, with a knowledge-base article # referenced to read about it. The Nest doesn’t do this so unless you are observant or it’s really cold outside it could linger for days or weeks without you realizing it. And you will find out when it’s really cold, the furnace won’t heat, and you won’t know why.
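To show what I mean by "very little code", here is a rough sketch of the kind of check I have in mind. This is not Nest's software and I have no idea how their firmware is structured; the `HeatCycle` type, the `power_issue_alert` function, the alert text, and every threshold are hypothetical. The only things taken from my experience are the roughly two-minute furnace shutdown, the repeated cycling with no temperature rise, and the low Lin reading.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HeatCycle:
    start_s: float      # when the heat call began, in seconds
    duration_s: float   # how long the furnace ran before shutting off
    temp_rise_f: float  # room temperature change during the cycle, in ˚F


def power_issue_alert(cycles: List[HeatCycle],
                      lin_ma: float,
                      now_s: float,
                      window_s: float = 3600.0,      # look back one hour (assumed)
                      short_cycle_s: float = 150.0,  # a bit over the two-minute shutdown
                      min_short_cycles: int = 3,     # assumed
                      min_current_ma: float = 60.0   # assumed
                      ) -> Optional[str]:
    """Return an alert message if the pattern looks like a power problem, else None."""
    recent = [c for c in cycles if now_s - c.start_s <= window_s]
    # A "failed" cycle ran only about as long as the furnace's ignition timeout
    # and did not warm the room at all.
    failed = [c for c in recent
              if c.duration_s <= short_cycle_s and c.temp_rise_f <= 0.0]
    if len(failed) >= min_short_cycles and lin_ma < min_current_ma:
        return ("Possible power issue: the furnace is cycling without heating "
                "and input current is low. Check for a connected C wire.")
    return None
```

Requiring both the short cycles and the low current is a deliberate choice in this sketch: either one alone could have an innocent explanation, but together they match what I saw that first morning.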

Otherwise, I think the Nest is pretty slick and I’ll be monitoring to see how it affects my energy bill, if at all.

Footnote: You can type a ˚ on Mac OS X with Option-K or a ° with Option-Shift-8