I have a customer who recently upgraded their EMC Celerra NS480 Unified Storage Array (based on Clariion CX4-480) to FLARE30 and enabled FASTCache across the array, as well as FASTVP automated tiering for a large amount of their block data. Now that it’s been configured and the customer has performed a large number of non-disruptive migrations of data from older RAID groups and VP pools into the newer FASTVP pool, including thick-to-thin conversions, I was able to get some performance data from their array and thought I’d share the results.

This is Real-World data

This is NOT some edge case where the customer’s workload is perfect for FASTCache and FASTVP, and it’s also NOT a crazy configuration that would cost an arm and a leg. This is a real production system running in a customer datacenter, with a few EFDs split between FASTCache and FASTVP and some SATA to augment capacity in the pool for their existing FC-based LUNs. These are REAL results that show how FASTVP has distributed the IO workload across all available disks and how a relatively small amount of FASTCache is absorbing a decent percentage of the total array workload.

This NS480 array has nearly 480 drives in total and has approximately 28TB of block data (I only counted consumed data on the thin LUNs) and about 100TB of NAS data. Out of the 28TB of block LUNs, 20TB is in Virtual Pools, 14TB of which is in a single FASTVP Pool. This array supports the customer’s ERP application, entire VMware environment, SQL databases, and NAS shares simultaneously.

In this case FASTCache has been configured with just 183GB of usable capacity (4 x 100GB EFD disks) for the entire storage array (128TB of data) and is enabled for all LUNs and Pools. The graphs here are from a 4 hour window of time after the very FIRST FASTVP re-allocation completed, using only about one day’s worth of statistics. Subsequent re-allocations in the FASTVP pool will tune the array even more.

FASTCache

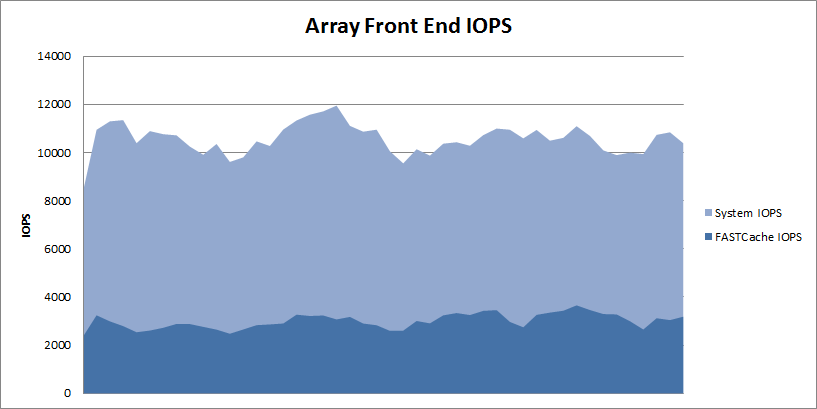

First, let’s take a look at the array as a whole. Here you can see that the array is processing approximately 10,000 IOPS throughout the entire interval.

FASTCache is handling about 25% of the entire workload with just 4 disks. I didn’t graph it here but the total array IO Response time through this window is averaging 2.5 ms. The pools and RAID Groups on this array are almost all RAID5 and the read/write ratio averages 60/40 which is a bit write heavy for RAID5 environments, generally speaking.
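To put the "write heavy for RAID5" remark in numbers, here is a rough back-of-the-envelope sketch. It assumes the common rule-of-thumb write penalties (4 back-end IOs per small random write on RAID5, 2 on RAID1/0) and ignores all cache hits and full-stripe writes, so treat the output as an illustration rather than a measurement from this array. The function and constant names are mine.

```python
def backend_iops(front_end_iops: float, read_fraction: float, write_penalty: int) -> float:
    """Estimate the disk IOPS needed to serve a front-end load with no cache hits."""
    reads = front_end_iops * read_fraction
    writes = front_end_iops * (1.0 - read_fraction)
    # Each host write costs `write_penalty` disk IOs; each host read costs one.
    return reads + writes * write_penalty


# Rule-of-thumb penalties (assumptions, not values read from the array).
RAID5_WRITE_PENALTY = 4   # read data, read parity, write data, write parity
RAID10_WRITE_PENALTY = 2  # write both mirror copies

raid5 = backend_iops(10_000, 0.60, RAID5_WRITE_PENALTY)
raid10 = backend_iops(10_000, 0.60, RAID10_WRITE_PENALTY)

print(f"RAID5 back-end IOPS:   {raid5:,.0f}")
print(f"RAID1/0 back-end IOPS: {raid10:,.0f}")
```

With a 60/40 mix, the 40% of IOs that are writes generate most of the back-end load on RAID5, which is why absorbing writes in cache matters so much here.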

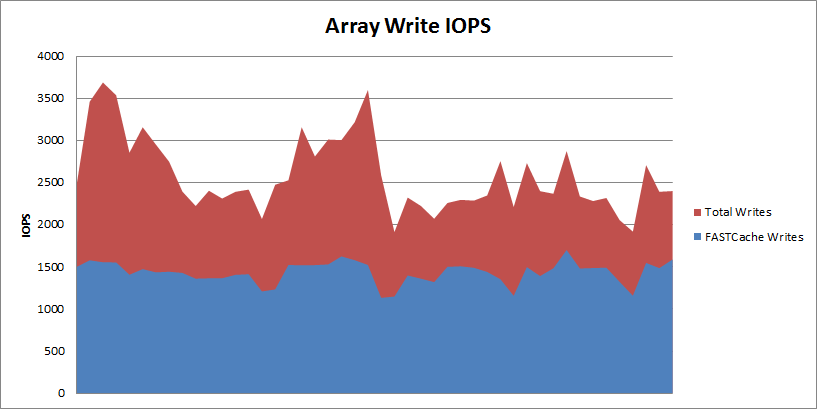

If you’ve done any reading about EMC FASTCache, you probably know that it is a read/write cache. Let’s take a look at the write load of the array and see how much of that write load FASTCache is handling. In the following graph you can see that out of the ~10,000 total IOPS, the array is averaging about 2500-3500 write IOPS with FASTCache handling about 1500 of that total.

That means FASTCache is reducing the back-end writes to disk by about 50% on this system. On the NS480/CX4-480, FASTCache can be configured with up to 800GB usable capacity, so this array could see higher overall performance if needed by augmenting FASTCache further. Installing and upgrading FASTCache is non-disruptive so you can start with a small amount and upgrade later if needed.
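The "about 50%" figure follows from the numbers read off the graph. A quick sketch of the arithmetic, using the approximate values quoted above (the variable names are mine):

```python
def absorbed_share(cache_write_iops: float, total_write_iops: float) -> float:
    """Percentage of host writes that are serviced by the cache."""
    return 100.0 * cache_write_iops / total_write_iops


FASTCACHE_WRITE_IOPS = 1500            # approximate, from the graph
TOTAL_WRITE_IOPS = (2500, 3000, 3500)  # low end, midpoint, high end of the range

for total in TOTAL_WRITE_IOPS:
    share = absorbed_share(FASTCACHE_WRITE_IOPS, total)
    print(f"{total} write IOPS -> FASTCache absorbs {share:.0f}%")
```

Across the observed range the share runs from roughly 43% to 60%, with 50% at the midpoint.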

FASTVP and FASTCache Together

Next, we’ll drill down to the FASTVP pool which contains 190 total disks (5 x EFD, 170 x FC, and 15 x SATA). There is no maximum number of drives in a Virtual Pool on FLARE30 so this pool could easily be much larger if desired. I’ve graphed the IOPS-per-tier as well as the FASTCache IOPS associated with just this pool in a stacked graph to give an idea of total throughput for the pool as well as the individual tiers.

The pool is servicing between 5,000 and 8,000 IOPS on average, which is about half of the total array workload. In case you didn’t already know, FASTVP and FASTCache work together to make sure that data is not duplicated in EFDs. If data has been promoted to the EFD tier in a pool, it will not be promoted to FASTCache, and vice versa. As a result of this intelligence, FASTCache acceleration is additive to an EFD-enabled FASTVP pool. Here you can see that the EFD tier and FASTCache combined are servicing about 25-40% of the total workload, the FC tier another 40-50%, and the SATA tier services the remaining IOPS. Keep in mind that FASTCache is accelerating IO for other Pools and RAID Group LUNs in addition to this one, so it’s not dedicated to just this pool (although that is configurable).

FASTVP IO Distribution

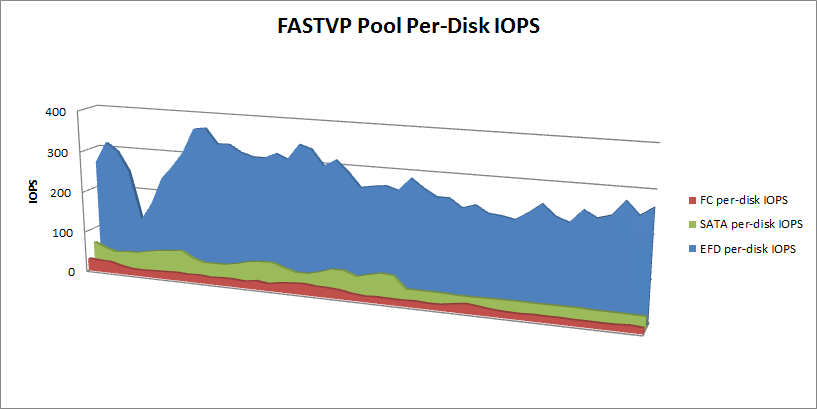

Lastly, to illustrate FASTVP’s effect on IO distribution at the physical disk layer, I’ve broken down IOPS-per-spindle-per-tier for this pool as well. You can see that the FC disks are servicing relatively low IO and have plenty of headroom available, while the EFD disks, also not being stretched to their limits, are servicing vastly more IOPS per spindle, as expected. The other thing you may have noticed here is that the EFDs are seeing the majority of the workload’s volatility, while the FC and SATA disks have a pretty flat workload over time. This illustrates that FASTVP has placed the more bursty workloads on EFD where they can be serviced more effectively.

Hopefully you can see here how a very small amount of EFDs used with both FASTCache and FASTVP can relieve a significant portion of the workload from the rest of the disks. FASTCache on this system adds up to only 0.14% of the total data set size and the EFD tier in the FASTVP pool only accounts for 2.6% of the total dataset in that pool.
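For anyone who wants to check the 0.14% figure, the arithmetic is sketched below. It assumes binary units (1TB = 1024GB); with decimal units the result still rounds to 0.14%. The variable names are mine.

```python
FASTCACHE_USABLE_GB = 183   # usable FASTCache capacity quoted earlier
TOTAL_DATA_TB = 128         # 28TB block + 100TB NAS
GB_PER_TB = 1024            # assumption: binary units

total_data_gb = TOTAL_DATA_TB * GB_PER_TB
fastcache_pct = 100.0 * FASTCACHE_USABLE_GB / total_data_gb

print(f"FASTCache is {fastcache_pct:.2f}% of the total data set")
```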

What do you think of these results? Have you added FASTCache and/or FASTVP to your array? If so, what were your results?

Sudharsan

March 26, 2011 at 8:33 pm

Yes , We had more amazing numbers on our Storage. Some Sharable results had been blogged by me and you can find it in the below link.

http://sudrsn.wordpress.com/2011/03/19/storage-efficiency-with-awesome-fast-cache/

storagesavvy

March 26, 2011 at 8:35 pm

Thanks, actually your blog post is the one that got me inspired to work on mine, your results look good!

xuming

March 27, 2011 at 4:20 am

Hi Richard, could you tell me the right way to calculate the read/write ratio of a storage system or an application? If you are monitoring a storage system for a month, do you calculate the ratio based on the full month of data, on a period of time (like a high-load period), or on the data at some specific point (i.e. the IOPS peak time)?

Best Regards

Xu Ming

storagesavvy

March 27, 2011 at 2:23 pm

That’s a hard question to answer. It really depends on what you are using the data for. You generally want some sort of average over time and then maybe another average for peak workloads that happen periodically. Size the pool and/or storage array for the general case unless the peaks are wildly different. A week or month of data would seem to be more than enough for an average. Generally a day will give you a great idea, but you might see weekly or monthly jobs that create significantly different ratios for short periods.
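As a rough illustration of the "overall average plus a separate peak average" idea, here is a minimal sketch. The sample values are made up, and the helper names (`read_pct`, `busiest`) and the choice of "busiest 20% of intervals" as the peak definition are my assumptions, not something produced by Analyzer.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (read IOPS, write IOPS) for one polling interval


def read_pct(samples: List[Sample]) -> float:
    """Read percentage weighted by IO count across all given intervals."""
    reads = sum(r for r, _ in samples)
    total = sum(r + w for r, w in samples)
    return 100.0 * reads / total if total else 0.0


def busiest(samples: List[Sample], fraction: float) -> List[Sample]:
    """Return the busiest `fraction` of intervals, ranked by total IOPS."""
    count = max(1, round(len(samples) * fraction))
    return sorted(samples, key=lambda s: s[0] + s[1], reverse=True)[:count]


# Hypothetical data: four ordinary intervals and one write-heavy batch job.
samples = [(6000, 4000), (5500, 3500), (6200, 4100), (3000, 9000), (5800, 3900)]

print(f"Overall read share: {read_pct(samples):.1f}%")
print(f"Peak read share:    {read_pct(busiest(samples, 0.2)):.1f}%")
```

If the two numbers are close, size for the overall figure; if the peak looks very different, as it does in this made-up data, it deserves its own sizing pass.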

bendiq

March 27, 2011 at 3:22 pm

Hey

Seeing info about IOPS here, but nothing on latency around the writes. Did you gather any statistics on this? The reason I ask is that logically, if using flash as a megacache for writes, write latency can (will) increase so performance will decrease.

Alex McDonald from NetApp did a blog posting to this effect

http://blogs.netapp.com/shadeofblue/2011/01/fast-is-slow-its-the-latency.html

storagesavvy

March 27, 2011 at 3:51 pm

As I mentioned in the text, the overall response time at the front end was avg 2.5ms across the array which is, needless to say, quite respectable. I don’t have before/after data yet to compare what the latency was without FASTCache however. I also remember that blog posting and the one thing that Alex didn’t address was that the higher latency shown with FASTCache was at a much higher IOPS level (over 40% increase over the non-FASTCache load). As we all know latency increases as IOPS increase, all other things equal, so it is a bit of a stretch to say that FASTCache actually caused any latency increase by itself in that test. Further, it’s likely that the second test would have seen much higher latency without FASTCache (ie: failed JetStress) if attempted at the same higher IOPS level.

the storage anarchist

March 28, 2011 at 12:12 pm

bendiq –

Flash is not necessarily slower than HDD on writes, and in fact, the read/write performance of flash devices varies widely across different implementations.

As Sudharsan notes, some flash devices handle random writes better than sequential – this is possibly why NetApp doesn’t do write caching, and why Alex @ NetApp would assert that Flash isn’t good for writes, since WAFL basically changes random writes into sequential destages to storage.

I can assure you that there are Flash drives available today that can outperform HDD on reads, writes AND on mixed read/write workloads of both random and sequential natures.

Aaron Patten

March 28, 2011 at 6:03 pm

All else being equal you will see a decrease in response time for a given load if using FAST Cache. In extremely write intensive environments FAST Cache can dramatically help reduce forced flush events as we can destage hot data to the SSD drives instead of the underlying SAS/FC/SATA disks.

Sudharsan S

March 28, 2011 at 7:54 am

Hi Bendiq – To answer your query, performance also depends on the block size of the data and on whether the IO is sequential or random. In our environment, we have found that random writes with smaller block sizes perform well and that sequential writes suffer. I would say disable FAST Cache for any LUNs that have a sequential write pattern. Also remember that the number of back-end disks comes into the picture once writes increase, and should be considered along with the amount of hot data and the size of FAST Cache.

Jonas

March 28, 2011 at 10:18 pm

@Alex Mc- keep on blogging about emc on ntap’s site! we love the negative coverage! Helps ntap customers learn about other options in the market. They also wonder why ntap feels the need to talk about it so much – heck it must be good!

@ Richard – u were a great customer to me and now a rockstar here at emc. Keep it up amigo!

storagesavvy

March 28, 2011 at 10:28 pm

@Jonas, thanks for the kind words, it’s been great working with you and this past year at EMC has been amazing.

Mimmus

March 29, 2011 at 1:53 am

How can I extract the same graphs for my NS480?

storagesavvy

March 29, 2011 at 8:43 am

I’ve had several people ask me that question and I think I’m going to work up a few blog posts to show how. Generally, I use Navisphere/Unisphere Analyzer to select the data I want, then export it to Excel for graphing.

Performance Analysis for Clariion and VNX – Part 1 «

March 30, 2011 at 8:33 am

[…] me to show them how to get useful data and graphs from their arrays and more recently after posting about FASTCache and FASTVP results I’ve had even more queries on the topic. So I’ve decided to put together a sort of how-to […]

Delano

March 30, 2011 at 7:43 pm

Good write up!

We are going through a similar move to FAST Cache and FAST VP. The planned heterogeneous FAST-enabled pool will have FC and SATA disks. EMC’s strong recommendation is to utilize RAID6, which is the path we will take. I’ve noticed your configuration is RAID5 all the way. Our architects have shown the pros and cons of both; however, RAID6 gives us that reliability just in case. Thoughts on what you’ve seen in action on RAID6 pools?

Alex Tanner

April 2, 2011 at 12:31 pm

@Delano – Currently pools support only one form of RAID type – so if you want to use R6 you would do so for your FC and EFD tier if present – this presents a high write overhead

EMC’s ‘recommendation’ to use R6 has been discussed in the context of pools and R5 is the norm in a lot of implementations

In terms of pool sizing, the pool can grow to include all the disks bar the Vault area, but the performance engineering ‘recommendation’ at this time is to keep pools to around 120 disks

The one limiting element on ramming the CX4 family with EFDs was the ability of the CPUs to keep up – this has changed radically with the new VNX platform and most units have as a design principle the ability to scale almost to full EFD configurations depending on the workload

Jason Burroughs

April 6, 2011 at 5:58 pm

Alex – I hadn’t heard about performance engineering’s recommendation to keep pools at around 120 disks. We have a pool with 140 FC disks. We were thinking of going up to 240 disks. Why 120? I’m sure 140 isn’t too far over the mark, but should we create a new pool for the other drives? We have a pool with 140 FC 15k drives + 15 x 1TB SATA drives + 5 x EFD drives. If we create a whole new pool, we’d have to replicate the multiple tiers to get the same benefits.

Chris Zurich

June 14, 2011 at 10:17 am

10k IOPs seems low IMO… what happens when you double or triple the load to say 50k IOPs? I’m guessing you’ll indicate that the EFDs to back end disk ratio would scale linearly as would the performance? Also, how many IOPs was the old array serving before you migrated over to the new system?

storagesavvy

June 14, 2011 at 10:35 am

Yes, 10K IOPS is not extremely high; it just happens to be what this customer’s environment is driving. Typically you would see the SSD performance scale up with workload, especially FASTCache. FASTVP is different in that you do need to have enough SSD capacity to fit the hottest blocks into SSD to get the best use. In this example, I believe two things are occurring that have actually reduced the load on the SSD tier: 1.) the SSD tier is not quite large enough for the pool from a pure capacity perspective, and just using larger SSDs would help fit more of the hot data in that tier, and 2.) the customer has set tiering policies on a lot of the LUNs based on their perceived importance, but that may not match the actual workload characteristics of the data. I’m working with them to set all of the LUNs in the pool to the same policy.

emcsan

January 17, 2012 at 8:28 am

We implemented FAST VP last year on our NS-960 and the results are very similar to what you noted here. We saw dramatic improvements. Now that we’ve been running it for quite a while, our biggest issue is the very large amount of data that needs to be moved every night with the relocation job. We run it for 8 hours every night, but it consistently says it will take 2-3 days to complete. We never catch up. The obvious answer would be to identify servers that have less of a need for auto-tiering to reduce the amount of data that’s being relocated every night. Would you have any other ideas or recommendations for speeding up the relocation job? Would the data on a specific LUN maintain the same tier distribution forever if auto-tier was disabled for that LUN?

storagesavvy

January 17, 2012 at 8:50 am

I’m glad to hear that you are/were seeing good results! What is the drive makeup of the pool? How much data? What kind of applications?

As far as per-LUN settings, if you set the policy on the LUN to “no movement”, the current distribution at that time will be maintained. The LUN will remain tiered, but will not be relocated during the daily relocation. Taking a look at which LUNs really need FASTVP tiering would be a good idea. If you have any LUNs that could sit in the lowest tier (ie: don’t need any higher performance than the lowest tier provides), then you could set them to Lowest Tier and they will move down and mostly stay there. This will free up space in the higher tiers for other LUNs as well as reduce the amount of data to be relocated over time.

It *may* help to run the relocation more than once a day, break it up into chunks. This can be done with a CLI based script and an external scheduler.

Also, there are some more recent recommendations related to data migration and other batch style activities that may cause unnecessary movement, FASTVP can be effectively paused for periods of time if you have such batch jobs.

You can open an SR about performance and note that your relocations are not finishing.

emcsan

January 17, 2012 at 11:40 am

We have two pools of 102 disks each, both RAID5, each pool has 11 200GB EFD, 70 600GB FC and 21 2TB SATA. They are at about 95% capacity. We average 11,000 Total IO/s throughout the day and about 23,000 at peak. SP utilization averages about 50% for both. The bulk of the IO is from oracle database servers running on P7 IBM hardware on AIX 6.

I looked into altering the relocation schedule with the CLI earlier and I could only find an option to turn it on or off, not to actually change the times or scheduled days for the job. Can you share the command you are referencing? I suppose I could set the job to run 24×7 and use a batch script to simply pause it when I don’t want it to run, if that’s viable.

I’ll definitely open an SR for a more detailed analysis, but I appreciate your comments. Your blog is great, I’ve read lots of useful information already. Thanks again for the reply!

storagesavvy

January 17, 2012 at 12:56 pm

At 95% full you *could* be running into an issue where there isn’t enough free space to effectively move the chunks each night. Support might mention this.

The built-in scheduler only supports one relocation window per day and it’s array wide.

With naviseccli, you can use the “…autotiering -relocation -start….” command and specify the poolid, how long you want it to run, and the rate (low/med/high). You would use CRON or Task Scheduler on a server/PC to issue the commands and build whatever schedule you want. Since the command is pool specific you can offset the relocations of multiple pools, or adjust each pool to its own quiet period, etc.

-opstatus for checking the current status of relocation

-stop can be used to stop it before the timer runs out if necessary.

Using this method you can have multiple schedules per day for each pool.
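As a rough sketch of what such an external schedule could look like, the snippet below generates crontab entries for several relocation windows. Everything in it is illustrative: the `start_relocation.sh` wrapper path is hypothetical and would need to contain the full naviseccli relocation-start command for your release (the exact syntax is not reproduced here), and the pool IDs and times are made up.

```python
from dataclasses import dataclass

# Hypothetical wrapper script: it would hold the actual naviseccli
# relocation-start command, taken from the CLI reference for your release.
START_SCRIPT = "/usr/local/bin/start_relocation.sh"


@dataclass(frozen=True)
class RelocationWindow:
    pool_id: int  # pool to relocate
    hour: int     # 0-23, local time on the scheduling host
    minute: int   # 0-59


def crontab_line(window: RelocationWindow) -> str:
    """Format one crontab entry: minute hour day-of-month month day-of-week command."""
    if not (0 <= window.hour <= 23 and 0 <= window.minute <= 59):
        raise ValueError(f"invalid start time {window.hour}:{window.minute}")
    return f"{window.minute} {window.hour} * * * {START_SCRIPT} {window.pool_id}"


# Illustrative schedule: two windows for pool 0, one offset window for pool 1.
schedule = [
    RelocationWindow(pool_id=0, hour=1, minute=0),
    RelocationWindow(pool_id=0, hour=13, minute=0),
    RelocationWindow(pool_id=1, hour=3, minute=30),
]

for window in schedule:
    print(crontab_line(window))
```

Keeping the array command inside a wrapper script means the schedule can be changed without touching the CLI invocation itself.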

storagesavvy

January 17, 2012 at 1:12 pm

One thing to know: using -stop/start resets the counters on the pool; it will clear the list of blocks to be moved and start analysis over again. This can be useful when there is too much data to move to practically complete.

-pause/resume would control the relocation action without resetting the tiering analysis data.

emcsan

January 17, 2012 at 1:18 pm

That’s great information, thank you very much for sharing.

JediMT » Blog Archive » Flash in the VNX – Part 1 – Introduction

August 11, 2012 at 4:23 am

[…] Richard Anderson created an excellent write up of how effective FAST Cache and FAST VP can be with real world data from a customer in his blog at storagesavvy.com. Check it out here: http://storagesavvy.com/2011/03/26/real-world-emc-fastvp-and-fastcache-results/  […]